2 ways in which managed SD-WAN accelerates VDI applications

Virtual desktop infrastructure (VDI) is commonly used by organizations to manage IT application access for employees in small remote locations, contract workers, and temporary employees. VDI offers these organizations flexibility and ease while maintaining data and application security. However, VDI applications are sensitive to latency and low throughput: the end-user experience depends heavily on the quality of the connection between the client and the server. This blog explains why MPLS-based solutions are unsuitable for these applications and explores how managed SD-WAN solves the performance problems associated with VDI implementations.

WHY MPLS IS NOT THE ANSWER FOR VDI PERFORMANCE

There are several reasons why MPLS is unsuitable for addressing the needs of technology service providers, chief among them time to market, cost, and security concerns.

Time to market & Cost: Provisioning MPLS, especially over international routes, is a complex activity that requires purchasing and commissioning equipment, negotiating inter-operator SLAs, and more. The typical lead time for an MPLS implementation is 60-90 days, if not more. For technology service providers, this lead time is problematic because their clients expect the service to start as soon as the contracts are signed. In addition to the long rollout time, MPLS links are expensive. Most carriers also bundle their last-mile connectivity with the offering, which further increases the overall cost.

Not suitable for all deployments: MPLS is not flexible and hence not suitable for all deployment scenarios. Where the employee base is small or the project duration is brief, it may not be viable to extend a dedicated MPLS link. In other cases, a client that is highly concerned about security may not allow CPEs to be placed on its premises, thus ruling out MPLS. In such cases, access over the internet remains the only option, and accessing Citrix or VMware applications over an internet link leads to severe degradation in performance.

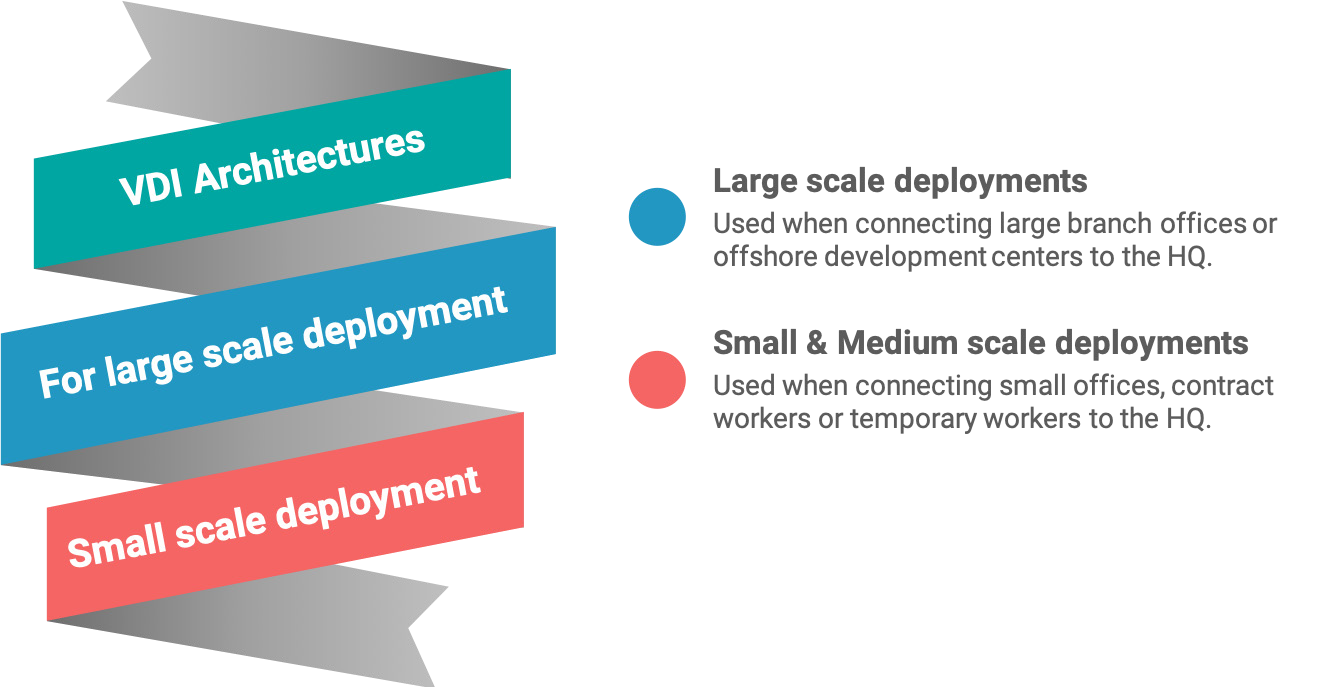

Large-Scale & Small-Scale Deployments

A VDI use case for large deployments

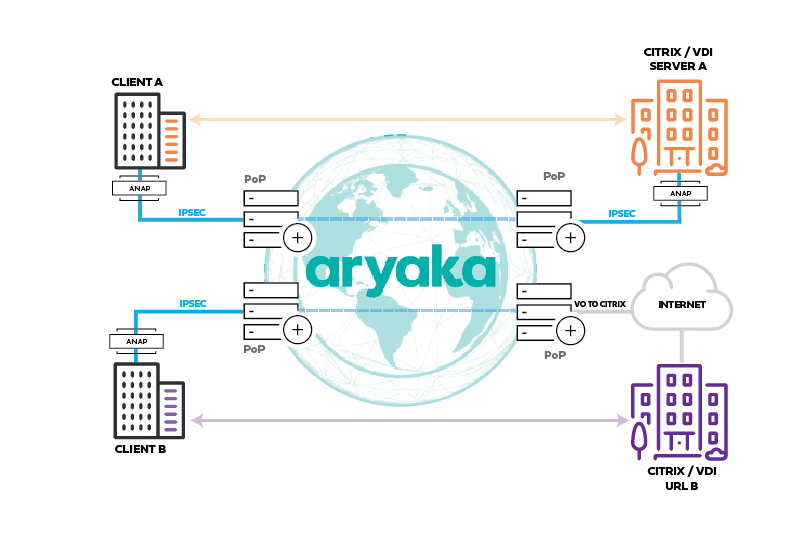

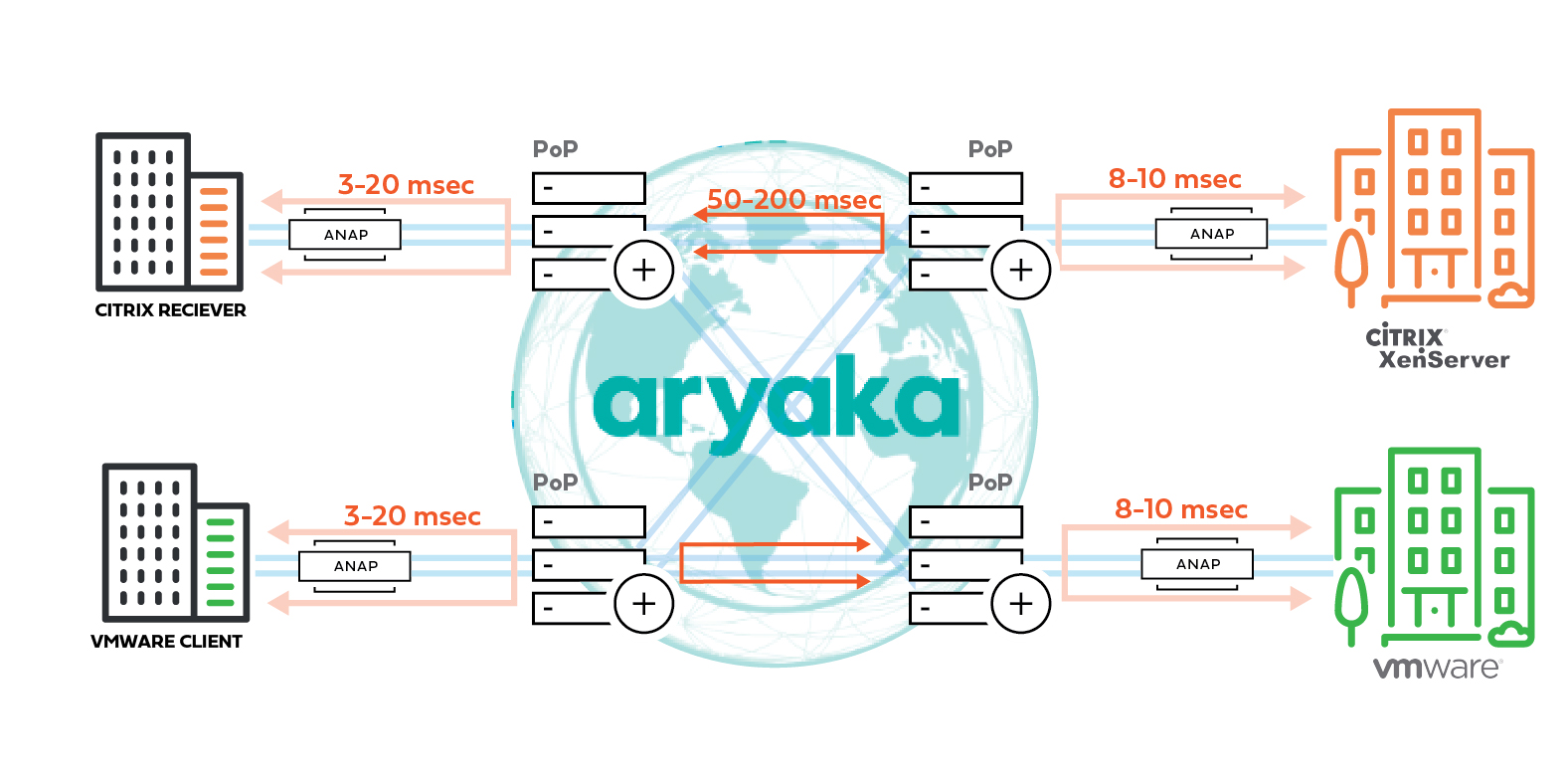

The first use case is an end-to-end link from the client to the server through Aryaka’s Layer 2 core network. Large deployments with many client instances benefit most from this approach. In the figure, connectivity between Client-A and Server-A follows this architecture.

This architecture requires the presence of Aryaka’s Network Access Port (ANAP) at both the client and server ends. Both the client/branch location and the server location are connected to the Aryaka Layer 2 network over IPSec tunnels. The presence of ANAPs at both ends of the connection enables WAN optimization features such as TCP optimization and data de-duplication. In addition, the MyAryaka cloud-based portal enables monitoring of the first-mile, middle-mile, and last-mile segments.

A VDI use case for small and medium deployments

In addition to full-fledged desktop access, Citrix and VDI allow any resource or application to be published as a URL. A URL is the preferred mode of access when only a limited number of users access a small set of applications. This scenario is typical when providing short-term access to contract workers or temporary staff. The benefits are flexibility and the ability to reuse existing access controls without significant changes. The published content or application is accessed through Citrix StoreFront and Receiver. The system administrator can also specify the application to be used to open the published content. Though flexible, this mode of operation affects application performance. Because the content is published as a URL and accessed over the internet, it is subject to the latency and packet loss issues associated with this channel.

Aryaka’s innovative approach solves this problem without the need for a CPE at the server location. In the figure, connectivity between Client-B and Server-B follows this architecture. The ANAP connects Client-B to the Aryaka core through an IPSec tunnel. VDI traffic is carried over the low-latency Aryaka core and exits from the PoP that is closest to the server location; the last mile is over the broadband internet. Application performance is improved because the traffic travels over Aryaka’s low-latency Layer 2 core network in the middle mile.
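To make the first-mile, middle-mile, and last-mile reasoning concrete, here is a minimal Python sketch that adds up round-trip time and compounds packet loss across the segments of a path. Every number in it is a hypothetical placeholder chosen for illustration, not a measurement or an Aryaka figure, and the `Segment`, `path_rtt_ms`, and `path_loss_rate` names are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    rtt_ms: float     # round-trip time contributed by this segment
    loss_rate: float  # fraction of packets lost on this segment (0.0 to 1.0)


def path_rtt_ms(segments):
    """End-to-end RTT is the sum of the per-segment RTTs."""
    return sum(s.rtt_ms for s in segments)


def path_loss_rate(segments):
    """A packet survives the path only if it survives every segment."""
    delivered = 1.0
    for s in segments:
        delivered *= 1.0 - s.loss_rate
    return 1.0 - delivered


# Hypothetical values, for illustration only.
internet_only = [
    Segment("public internet, end to end", 220.0, 0.02),
]
via_private_core = [
    Segment("first mile: Client-B to nearest PoP over IPSec", 15.0, 0.002),
    Segment("middle mile: private Layer 2 core", 140.0, 0.0),
    Segment("last mile: exit PoP to Server-B over broadband", 10.0, 0.002),
]

if __name__ == "__main__":
    for label, path in (("internet only", internet_only),
                        ("via private core", via_private_core)):
        print(f"{label}: {path_rtt_ms(path):.1f} ms RTT, "
              f"{path_loss_rate(path):.3%} loss")
```

The point of the sketch is structural: because the middle mile is usually the longest segment, improving it has the largest effect on the end-to-end totals, even when the first and last miles still run over ordinary internet links.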

Aryaka’s SD-WAN as-a-Service delivers SaaS acceleration through a private, software-defined Layer 2 network. Through the strategic distribution of PoPs, our private network is within 1-5 milliseconds of major business centers around the world.

Aryaka’s proprietary and patented optimization stack is baked into our fully-meshed private global network, freeing businesses from the hassles of maintaining and managing appliances, while providing optimized performance. In an international enterprise WAN scenario, the middle-mile is the longest path and hence responsible for most of the connectivity problems. Aryaka effectively solves this problem by deploying a fully meshed private Layer 2 network for the middle mile.

ARYAKA SMARTLINK – IMPROVING QUALITY OF FIRST MILE AND LAST MILE CONNECTIVITY

SmartLINK operates on the last-mile internet links that connect the ANAP SD-Branch appliance to the Aryaka PoP that provides the closest entry point into Aryaka’s global L2 core network, which guarantees completely deterministic QoS SLAs to any global destination. Aryaka’s PoPs are distributed so that 95% of the world’s knowledge-worker population can reach one with an internet access latency of less than 30 ms. SmartLINK consists of several technologies that, when combined, minimize latency and eliminate jitter and packet loss over last-mile internet connections, which typically consist of redundant internet links provided by different ISPs.
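The idea of a "closest entry point" can be illustrated with a short Python sketch that picks the PoP with the lowest median measured round-trip time. This is a generic illustration and not a description of how SmartLINK or the ANAP actually selects a PoP; the PoP names, the sample values, and the `closest_pop` function are all hypothetical.

```python
from statistics import median


def closest_pop(rtt_samples_ms):
    """Return the PoP name with the lowest median RTT.

    rtt_samples_ms maps a PoP name to a list of RTT samples in milliseconds.
    The median is used so that a single slow probe does not skew the choice.
    """
    candidates = {
        pop: median(samples)
        for pop, samples in rtt_samples_ms.items()
        if samples  # ignore PoPs with no measurements
    }
    if not candidates:
        raise ValueError("no RTT samples available for any PoP")
    return min(candidates, key=candidates.get)


# Hypothetical probe results, for illustration only.
samples = {
    "pop-a": [12.1, 11.8, 35.0],  # one outlier probe
    "pop-b": [9.5, 10.2, 9.9],
    "pop-c": [48.0, 47.5, 49.1],
}

if __name__ == "__main__":
    print(closest_pop(samples))
```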
