Gen AI Security: The Need for Unified SASE as a Service

Generative AI and large language models (LLMs) like GPT are transforming industries, driving innovation in automation, customer experience, and data analysis. However, as enterprises adopt these technologies, they face growing network security challenges that cannot be overlooked. These advanced AI systems rely on massive datasets, constant network connectivity, and cloud-based infrastructure, creating new vulnerabilities that traditional security approaches may struggle to address. Below, we explore the top five network security challenges facing generative AI and LLMs today.

1. Data Privacy and Protection Risks

Generative AI models depend on vast datasets to learn and operate effectively. These datasets often include sensitive or proprietary information, making them prime targets for attackers. If security controls aren’t stringent, unauthorized access can lead to data breaches, exposing customer information, intellectual property, or internal communications.

For enterprises deploying generative AI, data encryption, access controls, and secure transfer protocols are essential to ensure sensitive data remains protected as it moves across the network. Adopting solutions like Zero Trust Network Access (ZTNA) can help verify users and devices before granting access to critical AI resources.
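To make the Zero Trust idea concrete, here is a minimal sketch of a deny-by-default access check. It is an illustration only, not how any particular ZTNA product works: the `AccessRequest` fields, the `ENTITLEMENTS` table, and the resource names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    mfa_verified: bool      # user passed multi-factor authentication
    device_compliant: bool  # device passed a posture check (assumed signal)
    resource: str           # AI resource being requested


# Hypothetical entitlement table: which AI resources each user may reach.
ENTITLEMENTS = {
    "alice": {"llm-inference-api"},
    "bob": {"llm-inference-api", "training-data-store"},
}


def is_access_allowed(request: AccessRequest) -> bool:
    """Deny by default; allow only if user, device, and entitlement all check out."""
    if not request.mfa_verified:
        return False
    if not request.device_compliant:
        return False
    return request.resource in ENTITLEMENTS.get(request.user_id, set())
```

The point of the sketch is the ordering: identity and device posture are verified on every request, and an unknown user or resource falls through to a denial rather than an implicit allow.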

2. Increased Attack Surface from Cloud Dependencies

Most generative AI applications are hosted in cloud environments, which, while scalable and efficient, introduce additional security risks. These platforms rely on consistent, high-speed network connectivity and multiple integrations across APIs, third-party services, and hybrid cloud systems. Each layer increases the attack surface, creating more entry points for malicious actors.

To mitigate this risk, organizations need secure cloud access solutions, like Cloud Access Security Brokers (CASB) and Secure Web Gateways (SWG), to monitor and control AI-related traffic. A robust Firewall-as-a-Service (FWaaS) strategy can also help block unauthorized access and malicious activity across distributed cloud environments.

3. Denial of Service (DoS) and Resource Overloading

Generative AI and LLMs require significant computing power and continuous access to resources. This makes them particularly vulnerable to Denial of Service (DoS) attacks, where bad actors overload systems with traffic to degrade AI performance or take models offline. Even brief downtime can have severe consequences for businesses relying on AI-driven automation or customer services.

To defend against these threats, enterprises need intelligent traffic routing through SD-WAN to optimize network performance and reroute traffic during attacks. Additionally, implementing intrusion detection and prevention systems (IDPS) helps monitor for suspicious activity and mitigate DoS attempts in real time.
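One simple building block that often sits alongside these defenses is per-client rate limiting in front of an AI endpoint. The sketch below is a token bucket, a swapped-in illustration rather than an SD-WAN or IDPS feature; the class name, rate, and capacity are assumptions chosen for the example.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then at most `rate_per_sec` requests per second."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock            # injectable so the limiter can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        """Return True if the request may proceed, False if it should be rejected."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill tokens for the time that has passed, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

In practice one bucket would be kept per client or API key, so a single noisy source exhausts only its own budget instead of starving the model for everyone else.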

4. Model Poisoning and Data Integrity Attacks

Generative AI models are only as good as the data they’re trained on. Attackers can exploit this by injecting malicious or inaccurate data into AI training pipelines, a tactic known as model poisoning (also called data poisoning). This corrupts the model’s behavior, leading to biased outputs, security vulnerabilities, or even harmful responses that erode trust and cause financial losses.

Ensuring network visibility and enforcing strict data validation policies are crucial to detect and stop anomalous inputs. Organizations should also adopt end-to-end observability and AI-specific security controls to safeguard training environments and data sources from tampering.
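As a rough illustration of what a data validation policy can look like, the sketch below checks two things before data enters a training pipeline: that a dataset file matches a checksum published by its trusted source, and that each record has the expected shape. The record schema (`text`, `label`), the `ALLOWED_LABELS` set, and the size limit are hypothetical.

```python
import hashlib
import hmac

# Hypothetical label set for the example; a real pipeline defines its own schema.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def verify_dataset(payload: bytes, expected_sha256: str) -> bool:
    """Check that the dataset bytes match the checksum published by the data owner."""
    actual = hashlib.sha256(payload).hexdigest()
    return hmac.compare_digest(actual, expected_sha256)


def validate_record(record: dict, max_chars: int = 10_000) -> bool:
    """Reject records that are malformed, empty, oversized, or carry an unknown label."""
    text = record.get("text")
    label = record.get("label")
    if not isinstance(text, str) or not text.strip():
        return False
    if len(text) > max_chars:
        return False
    return label in ALLOWED_LABELS
```

Checks like these do not catch every poisoning attempt, since a well-formed record can still be malicious, but they stop tampered files and obviously anomalous inputs before they reach the model.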

5. Lack of Real-Time Threat Detection and Response

AI- and LLM-driven applications operate at high speed, and security teams must respond just as quickly to evolving threats. However, many enterprises lack real-time threat detection and automated mitigation capabilities across their networks. This creates a dangerous gap in which attackers can exploit vulnerabilities before they’re identified and patched.

By leveraging AI-powered security analytics, businesses can enable real-time threat detection and automated responses to secure network traffic and prevent breaches. Implementing a Unified SASE as a Service platform combines advanced security functions with optimized network performance, ensuring threats are addressed proactively without impacting productivity.
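Real-time detection can start from something much simpler than a full analytics platform. The sketch below flags traffic samples that deviate sharply from a rolling baseline using a z-score; it is a toy statistical detector, not a description of any vendor's analytics, and the window size, threshold, and class name are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class TrafficAnomalyDetector:
    """Flag request-rate samples that deviate sharply from the recent baseline."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_samples: int = 5):
        self.samples = deque(maxlen=window)  # rolling baseline of normal traffic
        self.threshold = threshold           # z-score above which a sample is flagged
        self.min_samples = min_samples       # baseline size needed before flagging

    def observe(self, requests_per_sec: float) -> bool:
        """Return True if the new sample looks anomalous against the baseline."""
        anomalous = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = pstdev(self.samples)
            if sigma > 0:
                anomalous = abs(requests_per_sec - mu) / sigma > self.threshold
            else:
                anomalous = requests_per_sec != mu
        # Keep flagged samples out of the baseline so an attack does not become "normal".
        if not anomalous:
            self.samples.append(requests_per_sec)
        return anomalous
```

A flagged sample would typically trigger an automated response, such as tightening rate limits or alerting the security team, rather than waiting for manual review.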

Building a Resilient Network for Gen AI with Aryaka Unified SASE as a Service

Generative AI and large language models bring incredible opportunities, but they also introduce complex security challenges that enterprises must tackle head-on. Aryaka’s Unified SASE as a Service is purpose-built to address these network security challenges, offering an integrated approach that combines robust security with optimized performance.

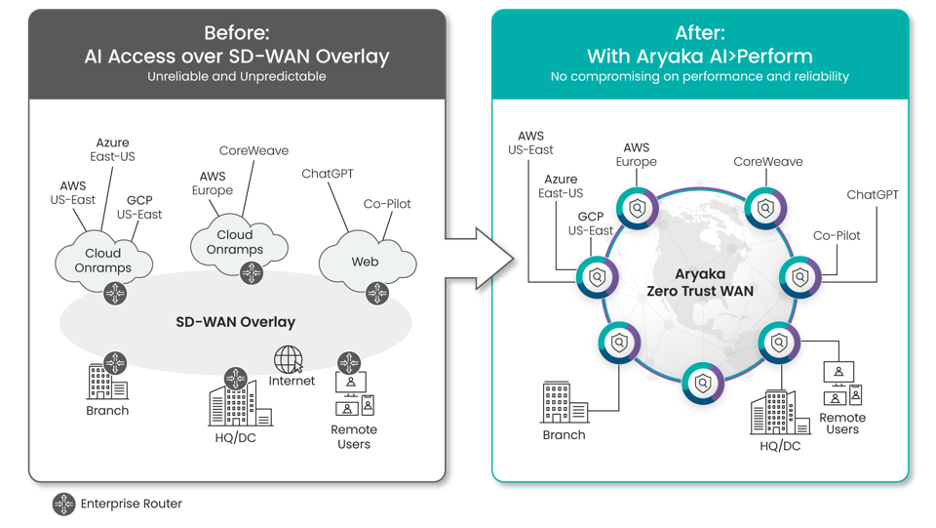

Thanks to our Zero Trust WAN and Next Gen Firewall (NGFW), Aryaka ensures that only verified users and devices can access critical AI infrastructure, mitigating unauthorized access, traffic interception, and data poisoning. Our platform also incorporates Secure Web Gateway (SWG) and Cloud Access Security Broker (CASB) capabilities to secure traffic flowing to and from the cloud, provide visibility into those flows, and enforce compliance with corporate policies.

On the performance side, Aryaka’s AI Acceleration global private backbone eliminates the unpredictability of the public internet, providing the low-latency, high-speed connectivity required for AI workloads and large data transfers. The platform’s intelligent traffic routing and built-in WAN optimization combat challenges like resource overloading and network congestion, delivering consistent performance even under heavy processing demands.

Aryaka OnePASS Architecture relies on three core architectural principles to deliver secure and seamless network access:

- Single Management Pane offers centralized visibility and control for both network and security services, enabling enterprises and managed service providers to leverage AI/ML analytics for proactive issue resolution and consistent oversight.

- Unified Control Plane ensures policies are centrally orchestrated and consistently applied across users and locations, minimizing misconfigurations, a common cause of security breaches.

- Distributed Data Plane enforces security closer to users and applications, whether at the edge, in the cloud, or within PoPs, providing scalable, location-aware protection without redundancy.

Combined with real-time network observability and analytics, Aryaka enables enterprises to proactively monitor, detect, and resolve threats before they disrupt generative AI operations, creating a secure and high-performing environment for innovation.

To protect their AI investments and ensure optimal performance, enterprises need the advanced capabilities, visibility, and efficiencies provided by Aryaka Unified SASE as a Service. By combining performance and security, organizations can harness the full potential of generative AI while mitigating risks.

Ready to safeguard your AI initiatives?

Reach out and see firsthand how Aryaka’s Unified SASE as a Service platform can secure and optimize your network for the demands of generative AI and large-scale models.